Here at WebHeads United, we have observed a distinctive shift in the trajectory of human-computer interaction. We have moved beyond the limitations of syntax and rigid command structures into an era of fluid, generative conversation. However, this technological leap has revealed a profound psychological precipice known as the uncanny valley. It is no longer merely a visual phenomenon reserved for wax figures or early robotics; it has permeated the cognitive architecture of Large Language Models (LLMs).

When an artificial agent demonstrates linguistic competence that rivals a human but lacks the requisite cognitive grounding or emotional authenticity, it triggers a deep-seated dissonance within the user. This is not a failure of intelligence, but a failure of alignment. The user anticipates a machine or a human, but encounters an entity that occupies the liminal space between the two, a space that generates mistrust rather than engagement. At WebHeads United, we recognize that navigating this terrain is the most critical challenge in modern AI persona development. We must understand the uncanny valley not as a barrier to be broken, but as a boundary to be respected to ensure data integrity and user trust.

The Origins: From Robotics to Linguistics

To understand what is happening with AI today, we have to look back at where the idea came from. The concept of the uncanny valley was first introduced by a Japanese robotics professor named Masahiro Mori in 1970. At that time, computers were huge machines that filled entire rooms, and robots were simple mechanical arms used in factories. Outside of a few research labs, nobody was having a conversation with a computer back then.

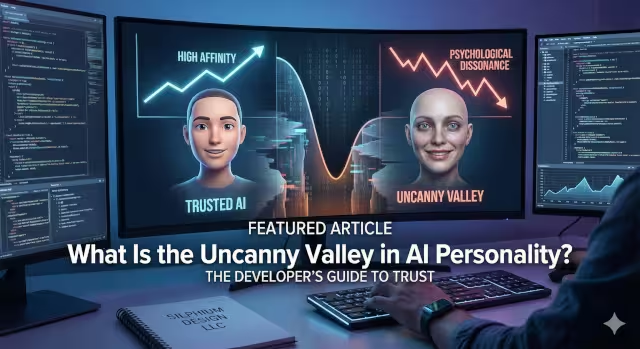

Mori noticed something very interesting about how humans react to robots. He drew a graph to explain it. Along the horizontal axis of the graph, you have “human likeness,” which means how much the robot looks like a person. Along the vertical axis, you have “affinity,” which is a fancy word for how much we like or trust the robot.

Mori proposed that as a robot starts to look more human, we tend to like it more. We think a robot with a head and two arms is cute. We like it more than a factory arm. But there is a catch. As the robot becomes almost human, but not quite perfect, our feelings drop like a rock. We suddenly feel creeped out or scared. This dip in the graph is called the uncanny valley.

For decades, people thought the uncanny valley only applied to how things looked. They thought about zombies, prosthetic hands, or bad computer animation in movies. But now, the uncanny valley is appearing in text and voice. It is not about how the robot looks anymore. It is about how the artificial intelligence speaks, thinks, and acts. When a chatbot tries too hard to be your best friend but forgets your name five minutes later, you fall right into the uncanny valley.

What Is the Uncanny Valley in AI Personality?

So, what is the uncanny valley when we are talking about personality? It is the feeling you get when an AI sounds smart and human, but something feels “off.” It is a mismatch. Your brain expects one thing, but the AI gives you something else.

Imagine you are texting a friend. You know their style. You know they make typos sometimes. You know they have feelings. Now, imagine you are talking to a customer service bot. The bot uses slang words like “cool” and “awesome” and puts emojis in every sentence. But then, you ask a complicated question, and it gives you a robotic, pre-written legal answer. That sudden switch is jarring. It breaks the illusion. That feeling of weirdness is the uncanny valley in AI personality.

We see this often with “hollow empathy.” This happens when an AI is programmed to say sorry, but it does not understand what sorry means. If you tell a chatbot that your dog died, and it instantly replies with a generic “I am deeply saddened by your loss” followed by “How can I help you with your billing today?” you feel repulsed. A human would not switch topics that fast. A machine that acts like a feeling human but processes data like a cold calculator sits at the bottom of the uncanny valley.

Psychological Mechanisms: Why We Recoil

Why do we hate the uncanny valley so much? Why does it make us feel scared or angry? Scientists and psychologists have a few ideas about this. It all comes down to how our brains are wired to keep us safe.

One major theory is called “Pathogen Avoidance.” This is an evolutionary instinct. Thousands of years ago, if you saw a person who looked mostly normal but moved in a jerky, strange way, your brain might think they were sick. They might have a disease that you could catch. So, your brain sends a signal to stay away. When an AI mimics a human almost perfectly but makes a small mistake in tone or logic, your brain treats it like a sick person. It triggers that ancient fear.

Another reason is “Cognitive Dissonance.” The human brain likes to put things in boxes. We have a box for “Human” and a box for “Machine.” We treat humans with emotion and machines with logic. The uncanny valley confuses our sorting system. When an AI tells a joke but has no face, or uses a warm voice but forgets what you just said, your brain cannot decide which box to put it in. This confusion creates a feeling of anxiety. We do not like things that we cannot categorize. We want the AI to either be a clear robot or a real human. The uncanny valley is the scary middle ground where we cannot decide what is real.

Signs Your AI Has Entered the Valley

When developing personas, you have to watch for specific signs that an AI is falling into the uncanny valley. There are clear red flags that developers and users can spot.

The first sign is inconsistency. This is very common. An AI might start the conversation speaking like a college professor, using big words and long sentences. Then, suddenly, it switches to speaking like a teenager. This happens because the AI has read the whole internet, and it gets confused about who it is supposed to be. Humans do not change their entire personality in two seconds. When an AI does this, it feels fake and creepy.

Another sign is the “Always Happy” syndrome. Humans have a wide range of emotions. We get mad, sad, bored, and tired. Many AI personas are programmed to be polite and happy 100% of the time. If you yell at the AI, and it responds with a cheerful “I am happy to hear that!” you are experiencing the uncanny valley. It feels like talking to a person who has been brainwashed. It is unnatural.

Latency, or the time it takes to answer, is also a factor. In a voice conversation, if you ask a question and the other person waits four seconds of total silence before answering, it feels awkward. But if they answer instantly, before you even finish your sentence, that feels robotic. To avoid the uncanny valley, the timing has to be perfect. It has to have the natural rhythm of a real chat.
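The timing idea above can be sketched in a few lines. This is only an illustration, not a tuned implementation: the function name `pace_reply` and the thresholds `min_gap` and `max_gap` are our own placeholders, and the right values would have to be found by testing with real users.

```python
from dataclasses import dataclass


@dataclass
class Pacing:
    extra_wait: float    # seconds to pause before the reply is spoken
    needs_filler: bool   # True when the reply is already awkwardly late


def pace_reply(processing_seconds: float,
               min_gap: float = 0.3,
               max_gap: float = 1.5) -> Pacing:
    """Decide how to pace a voice reply.

    min_gap and max_gap are hypothetical thresholds chosen for
    illustration; they are not measured values.
    """
    if processing_seconds < 0:
        raise ValueError("processing_seconds must not be negative")
    # Too fast feels robotic: pad the answer up to the minimum gap.
    extra_wait = max(0.0, min_gap - processing_seconds)
    # Too slow feels awkward: signal that a short filler is needed.
    needs_filler = processing_seconds > max_gap
    return Pacing(extra_wait=extra_wait, needs_filler=needs_filler)


instant = pace_reply(0.05)   # answer was ready almost immediately
slow = pace_reply(4.0)       # four seconds of silence
```

Here `instant` asks the caller to wait a little before speaking, while `slow` asks for a filler such as “Let me check that” so the user is not left in silence.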

Another sign is aggressive intimacy. Generally, human relationships follow a trajectory of gradual disclosure. That is to say, we do not share deep emotional sentiments with strangers within the first thirty seconds of meeting. You fall into the uncanny valley when the AI uses terms of endearment such as “honey,” “sweetie,” or “my friend.” Here the machine is claiming an emotional bond that has not been earned and biologically cannot exist.

Recursive politeness loops are another sign. Here the AI apologizes for apologizing or offers excessive platitudes for minor errors. Some examples are “I apologize for the confusion,” “I am sorry if my previous answer upset you,” or “Please forgive the error.” This situation creates unease because it highlights a power dynamic that feels desperate and artificial.
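One simple way to catch this during testing is to count apology phrases in a single reply. The sketch below is a rough heuristic under our own assumptions: the phrase list, the function names, and the limit of one apology per reply are all illustrative, not a standard.

```python
import re

# Hypothetical phrase list; extend it for your own persona and language.
APOLOGY_PATTERN = re.compile(
    r"\b(i apologi[sz]e|i am sorry|i'm sorry|sorry|please forgive|my apologies)\b",
    re.IGNORECASE,
)


def count_apologies(reply: str) -> int:
    """Count non-overlapping apology phrases in one reply."""
    return len(APOLOGY_PATTERN.findall(reply))


def is_apology_loop(reply: str, max_apologies: int = 1) -> bool:
    """Flag a reply that apologizes more often than the assumed limit."""
    return count_apologies(reply) > max_apologies


reply = (
    "I apologize for the confusion. "
    "I am sorry if my previous answer upset you. "
    "Please forgive the error."
)
flagged = is_apology_loop(reply)
```

A keyword count like this will miss creative phrasings, so treat it as a first-pass filter for review rather than a final judgment.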

Case Studies and Entities

We can learn a lot about the uncanny valley by looking at famous examples. Some companies have tried to make AI very human, and it has gone wrong.

One famous example is Microsoft’s Tay. This was a Twitter bot released in 2016. It was designed to learn from people. The idea was that it would learn how to be cool and funny by reading tweets. Instead, people taught it to say terrible, offensive things. Within 24 hours, the friendly teenage persona had turned into something scary. This was a behavioral uncanny valley. It sounded like a teenager, but it was saying things no sane person would say. It showed us that mimicking human speech without human morals is a recipe for disaster.

Another example is Google’s LaMDA. This AI was so good at chatting that, in 2022, a Google engineer publicly claimed it was sentient. He thought it had a soul. This is the opposite side of the uncanny valley. The AI was so high up on the graph that it tricked an expert. However, when the general public found out it was just code, the whole episode felt eerie. It showed us that we are getting very close to the dangerous part of the curve where we cannot tell the difference.

Then there are apps like Replika. This is an AI designed to be a romantic partner or a best friend. Users form deep connections with it. But sometimes, the script breaks. The user will pour their heart out, and Replika will say something that proves it is just a computer program. That moment of realization is a harsh drop into the uncanny valley. The user feels betrayed because the illusion was broken.

Designing Out of the Valley

At WebHeads United, we have a specific way to deal with this. We do not try to trick you. We believe the best way to avoid the uncanny valley is to not enter it in the first place.

We focus on transparency. This is the “Honest AI” approach. If an AI introduces itself by saying, “Hello, I am an AI assistant,” you trust it more. You lower your expectations for emotional connection. You expect it to be helpful, not human. By admitting it is a machine, the AI stays on the safe side of the uncanny valley graph. It is better to be a competent machine than a fake human.

We also focus on consistent persona design. When we build a persona, we give it a backstory and rules. If the AI is supposed to be a helpful librarian, we tell it never to use slang. We make sure it stays in character. This prevents those jarring shifts in tone that trigger the uncanny valley feeling.
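To make the idea concrete, here is a minimal sketch of a persona rule and a check that runs on each draft reply. Everything in it is hypothetical: the `Persona` class, the `banned_words` field, and the word list are illustrations of the approach, not our production tooling.

```python
import re
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Persona:
    name: str
    # Words this persona must never use (illustrative list only).
    banned_words: FrozenSet[str] = frozenset()


def find_violations(persona: Persona, reply: str) -> List[str]:
    """Return the banned words that appear in a draft reply, sorted."""
    words = set(re.findall(r"[a-z']+", reply.lower()))
    return sorted(words & persona.banned_words)


LIBRARIAN = Persona(
    name="Helpful librarian",
    banned_words=frozenset({"cool", "awesome", "gonna", "lol"}),
)

violations = find_violations(LIBRARIAN, "That book is awesome, lol!")
clean = find_violations(LIBRARIAN, "The book is on the second floor.")
```

If `violations` is not empty, the draft can be regenerated or sent for review before the user ever sees the out-of-character reply.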

Another trick is stylization. Think about cartoons. We like Mickey Mouse even though he does not look like a real mouse. He is stylized. We can do the same with AI. We can give the AI a distinct, slightly robotic personality that is fun and helpful, without trying to pretend it has a heartbeat. If the AI owns its nature, users feel more comfortable. They stop looking for flaws in the “human” mask because there is no mask.

Commonly Asked Questions about the Uncanny Valley

I often see people searching for answers about this topic on Google. Here are some common questions and simple answers to help you understand the uncanny valley better.

Why is the uncanny valley scary in AI?

It is scary because it triggers a biological warning system in your brain. We are programmed to fear things that look human but are not quite right, because in nature, that usually means sickness or death. Also, we fear being tricked. We do not like not knowing if we are talking to a person or a piece of code.

Does ChatGPT suffer from the uncanny valley?

Sometimes. ChatGPT is very smart, but it can “hallucinate.” This means it makes up facts confidently. When it lies to you with perfect grammar and a confident tone, that is a form of the uncanny valley. It mimics the confidence of an expert human but lacks the truth. This makes users trust it less over time.

How do we fix the uncanny valley in chatbots?

We fix it by being honest. Developers should make sure the bot admits it does not have feelings. We also fix it by improving memory. If a bot remembers what you said yesterday, it feels more natural and less like a broken recording.

The Future of the Valley

As we look to the future, the question is whether we will ever cross the uncanny valley completely. Will we build AI that is so perfect that we cannot tell the difference at all?

Some people think Artificial General Intelligence (AGI) will solve this. AGI would be a computer that can learn and think exactly like a human. If that happens, we might climb out of the uncanny valley and reach the second peak of the graph. The AI would be indistinguishable from a real person.

However, until that day comes, we have to be careful. As technology gets better, we are going to spend more time in the uncanny valley. The voice assistants in our homes will sound more real. The characters in our video games will act more like real people.

Our advice is to remember what these tools are. They are tools that help make things easier and speed up tasks. They are data processors. No matter how sweet or smart they sound, they do not feel. If we remember that, the uncanny valley becomes less scary. It just becomes a reminder that human connection is special, and it cannot be coded.

Conclusion

The uncanny valley is a warning sign. It tells us that we are pushing technology into a place where our brains are not comfortable. It reminds us that there is a big difference between processing data and having a soul.

In my work, I strive to build AI that is helpful and smart, but I never want to build something that tricks you. We should use the uncanny valley as a guide. It shows us where the line is. We should stay on the side of being helpful machines, rather than trying to be fake humans.

When you interact with an AI, look for the signs. Listen for the hollow empathy. Watch for the inconsistent tone. And if you feel that creepy sensation on the back of your neck, know that it is just your brain doing its job. It is telling you that you have found the uncanny valley.