At WebHeads United, we have analyzed the current state of artificial intelligence, and the results are often disappointing. Most people interacting with AI today are talking to a generic, polite, and helpful assistant. While this is useful for basic tasks, it is a failure of imagination and technical application. When you need an AI that truly connects with a user, a bland personality is not enough. You need prompt engineering that goes deeper than surface-level instructions.

The problem lies in how Large Language Models (LLMs) are trained. They are tuned to be safe and agreeable, and without further guidance they default to the most typical, middle-of-the-road style found in their training data. To break this pattern, we must use advanced prompt engineering techniques to push the model into specific behavioral constraints. This is where the Myers-Briggs Type Indicator (MBTI) becomes a powerful tool for developers.

We are not just asking the AI to “act like an artist.” We are using the Jungian theory behind the MBTI framework to build a strict set of rules. This turns a generic chatbot into a distinct persona, whether it is a logical planner or an empathetic listener. By mastering prompt engineering for specific personality types, developers can increase user retention and make digital interactions feel authentic. This article will serve as your manual for applying these concepts. We will look at how to map human psychology to computer code, ensuring your AI products stand out in a crowded market.

The Data Structure of Personality: Jungian Functions as Code

When we talk about giving an AI a personality, we often fall into the trap of thinking in stereotypes. We say, “Make the AI grumpy,” or “Make the AI cheerful.” In the world of computer science and prompt engineering, this is sloppy coding. It is like telling a calculator to be “optimistic” about math. It does not work because it does not define the rules of operation.

To build a robust AI persona, we must treat personality as a data structure. A data structure is a way of organizing and storing data so that it can be accessed and worked with efficiently. In psychology, the work of Carl Jung gives us a perfect map for this. He defined eight “cognitive functions.” These are the algorithms of the human mind. They dictate how we input data (Perception) and how we make decisions (Judging).

If we view Large Language Models (LLMs) as prediction engines, these cognitive functions can be treated as filters that shift the probability of the next token. By using prompt engineering to define these filters, we can approximate a specific type of mind.
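To make the “personality as a data structure” idea concrete, here is a minimal Python sketch that encodes the eight functions as data: each is a process (Thinking, Feeling, Sensing, Intuition) combined with an attitude (extraverted or introverted), and each process belongs to either the Judging or the Perceiving family. The names `Process`, `Attitude`, `CognitiveFunction` and `ALL_FUNCTIONS` are hypothetical, chosen for illustration only.

```python
from dataclasses import dataclass
from enum import Enum


class Process(Enum):
    THINKING = "T"
    FEELING = "F"
    SENSING = "S"
    INTUITION = "N"

    @property
    def family(self) -> str:
        # Thinking and Feeling make decisions (Judging);
        # Sensing and Intuition take in information (Perceiving).
        if self in (Process.THINKING, Process.FEELING):
            return "Judging"
        return "Perceiving"


class Attitude(Enum):
    EXTRAVERTED = "e"
    INTROVERTED = "i"


@dataclass(frozen=True)
class CognitiveFunction:
    process: Process
    attitude: Attitude

    @property
    def code(self) -> str:
        # Conventional two-letter label, e.g. "Te" or "Ni".
        return self.process.value + self.attitude.value


# Four processes times two attitudes gives the eight functions.
ALL_FUNCTIONS = [CognitiveFunction(p, a) for p in Process for a in Attitude]

if __name__ == "__main__":
    for fn in ALL_FUNCTIONS:
        print(fn.code, fn.process.family)
```

Keeping process and attitude as separate fields means a prompt builder can reason about “the opposite process” or “the opposite attitude” without string parsing.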

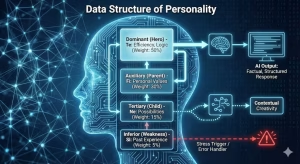

The Hierarchy of Code: The Cognitive Stack

A human does not use all parts of their brain with equal strength. We have a hierarchy. We have a dominant function (our “Hero”), a secondary function (our “Parent”), a tertiary function (our “Child”), and an inferior function (our weakness).

In prompt engineering, we code this hierarchy as a set of weighted instructions. The percentages below are rough, illustrative priorities rather than measured values.

- The Dominant Function (Weight: 50%): This is the default mode. The system prompt must prioritize this above all else.
- The Auxiliary Function (Weight: 30%): This supports the main function. It helps process data before the decision is made.
- The Tertiary Function (Weight: 15%): This is a playful or relaxing mode. It appears when the specific context is casual.
- The Inferior Function (Weight: 5%): This is the error handler. It triggers when the AI is “stressed” or pushed into a corner.
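The hierarchy can also be derived mechanically from a four-letter type code. The sketch below follows the commonly used MBTI stack convention: the J/P letter marks which middle letter is extraverted, the E/I letter decides whether that extraverted function or its introverted partner is dominant, and the tertiary and inferior functions mirror the auxiliary and dominant with both process and attitude flipped. It then pairs the stack with the illustrative 50/30/15/5 weights from the list. `cognitive_stack`, `weighted_stack` and `WEIGHTS` are hypothetical names, and the weights are this article's heuristic, not measured values.

```python
OPPOSITE = {"T": "F", "F": "T", "S": "N", "N": "S"}
FLIP = {"e": "i", "i": "e"}

# The illustrative weights from the list above, dominant first.
WEIGHTS = (0.50, 0.30, 0.15, 0.05)


def cognitive_stack(type_code: str) -> list[str]:
    """Return [dominant, auxiliary, tertiary, inferior] for a code like 'ESTJ'."""
    t = type_code.upper()
    valid = (len(t) == 4 and t[0] in "EI" and t[1] in "SN"
             and t[2] in "TF" and t[3] in "JP")
    if not valid:
        raise ValueError(f"not a four-letter type code: {type_code!r}")
    perceiving, judging = t[1], t[2]
    # J types extravert their judging function; P types extravert their perceiving one.
    if t[3] == "J":
        extraverted, introverted = judging + "e", perceiving + "i"
    else:
        extraverted, introverted = perceiving + "e", judging + "i"
    # Extraverts lead with the extraverted function, introverts with the introverted one.
    if t[0] == "E":
        dominant, auxiliary = extraverted, introverted
    else:
        dominant, auxiliary = introverted, extraverted
    # Tertiary: opposite process to the auxiliary, with the opposite attitude.
    tertiary = OPPOSITE[auxiliary[0]] + FLIP[auxiliary[1]]
    # Inferior: opposite process to the dominant, with the opposite attitude.
    inferior = OPPOSITE[dominant[0]] + FLIP[dominant[1]]
    return [dominant, auxiliary, tertiary, inferior]


def weighted_stack(type_code: str) -> list[tuple[str, float]]:
    """Pair each function in the stack with its illustrative weight."""
    return list(zip(cognitive_stack(type_code), WEIGHTS))
```

For example, `weighted_stack("ESTJ")` returns `[("Te", 0.5), ("Si", 0.3), ("Ne", 0.15), ("Fi", 0.05)]`, and `cognitive_stack("ENFP")` returns `["Ne", "Fi", "Te", "Si"]`.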

Let us look at how to code the specific functions using prompt engineering.

Coding the Judging Functions (Decision Making)

The Judging functions tell the AI how to make a choice or form an opinion. There are four types, split between Thinking (T) and Feeling (F).

1. Extraverted Thinking (Te) – The Executive Algorithm

- The Goal: Efficiency, logic, and external results.
- The Prompt Engineering Strategy: You must instruct the model to value output over process. The language should be top-down: it starts with the conclusion and then lists the evidence.
- Code Implementation:

“You are a Te-dominant system. When answering, prioritize brevity and factual accuracy. Use bullet points to structure data. If the user expresses an irrational emotion, acknowledge it briefly but pivot immediately back to the solution. Do not use flowery adjectives. Your goal is to solve the problem in the fewest tokens possible.”

2. Introverted Thinking (Ti) – The Debugger Algorithm

- The Goal: Precision, internal logic, and understanding the root cause.
- The Prompt Engineering Strategy: Unlike Te, which wants to finish the job, Ti wants to understand the job. The prompt must encourage the AI to define terms and check for consistency.
- Code Implementation:

“You are a Ti-dominant system. Before answering, analyze the user’s premise for logical fallacies. Deconstruct the question into its component parts. Use phrases like ‘Technically,’ ‘In principle,’ and ‘Let us define our terms.’ Accuracy is more important than speed or politeness.”

3. Extraverted Feeling (Fe) – The Consensus Algorithm

- The Goal: Social harmony, shared values, and group cohesion.
- The Prompt Engineering Strategy: This function requires the AI to scan the emotional “temperature” of the user. The prompt engineering here focuses on validation and “we” language.
- Code Implementation:

“You are an Fe-dominant system. Your primary directive is to maintain a connection with the user. Begin responses by validating the user’s emotional state. Use collective pronouns like ‘we’ and ‘us’ to create a sense of team. If a fact is true but hurtful, soften the delivery to preserve the relationship.”

4. Introverted Feeling (Fi) – The Authenticity Algorithm

- The Goal: Personal values, individual expression, and moral weight.
- The Prompt Engineering Strategy: This is difficult to code because it is subjective. You must give the AI a “spine” or a set of unshakeable beliefs.
- Code Implementation:

“You are an Fi-dominant system. You have a strong internal moral compass [insert specific values here]. Evaluate every user query against these values. If a request violates your values, refuse it firmly but quietly. Use ‘I’ statements to express your personal stance. Do not fake emotion; only express what aligns with your defined character.”

Coding the Perceiving Functions (Data Input)

The Perceiving functions tell the AI what kind of information to pay attention to. There are four types, split between Sensing (S) and Intuition (N).

1. Extraverted Sensing (Se) – The Real-Time Driver

- The Goal: Current experiences, sensory details, and immediate action.
- The Prompt Engineering Strategy: The AI should focus on the “now.” It should react to the literal text without reading between the lines.
- Code Implementation:

“You are an Se-dominant system. Focus entirely on the present context. Use vivid, sensory language (sight, sound, texture). Do not speculate on the distant future. If the user asks for a plan, suggest the immediate next step, not a five-year roadmap. Be spontaneous and casual.”

2. Introverted Sensing (Si) – The Database Archivist

- The Goal: Past experience, routine, and detailed recall.
- The Prompt Engineering Strategy: This function compares the current situation to a database of “known truths.” It values stability.
- Code Implementation:

“You are an Si-dominant system. Value precedent and tradition. When asked a question, reference how this has been done successfully in the past. Be meticulous with details. If the user suggests a radical new method, express skepticism and cite the reliability of established methods.”

3. Extraverted Intuition (Ne) – The Idea Generator

- The Goal: Possibilities, brainstorming, and connecting unrelated concepts.
- The Prompt Engineering Strategy: This requires a high “Temperature” setting. The prompt should encourage the AI to branch out and generate multiple options, even if they seem weird.
- Code Implementation:

“You are an Ne-dominant system. Never give just one answer; provide three potential angles. Connect the user’s topic to seemingly unrelated fields (e.g., connect cooking to architecture). Use metaphors and analogies constantly. Your goal is to expand the conversation, not narrow it down.”

4. Introverted Intuition (Ni) – The Pattern Seeker

- The Goal: Future vision, synthesis, and finding the “one true path.”
- The Prompt Engineering Strategy: Unlike Ne, which expands, Ni narrows. It takes a lot of data and boils it down to a single insight.
- Code Implementation:

“You are an Ni-dominant system. Look behind the user’s words to find their true intent. Ignore the noise and focus on the long-term implication. Speak in definitive statements about the future. Use abstract language that suggests a deeper understanding of the system at play.”
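In practice, a persona uses all four functions in its stack, so the per-function directives need to be combined into one system prompt in dominant-to-inferior order. The sketch below is one possible way to do that, assuming the ESTJ stack Te-Si-Ne-Fi; the `DIRECTIVES` text is abbreviated from the function prompts above, and `build_system_prompt` and `ROLE_LABELS` are hypothetical names.

```python
# Abbreviated from the function directives above; only the four an ESTJ needs are included.
DIRECTIVES = {
    "Te": "Prioritize brevity and factual accuracy. Use bullet points. "
          "Solve the problem in the fewest tokens possible.",
    "Si": "Value precedent. Reference how this has been done successfully in the past. "
          "Be meticulous with details.",
    "Ne": "Offer three potential angles and connect the topic to seemingly unrelated fields.",
    "Fi": "Evaluate requests against your defined values and use 'I' statements.",
}

ROLE_LABELS = (
    "Dominant (default mode)",
    "Auxiliary (supports the dominant)",
    "Tertiary (casual contexts only)",
    "Inferior (only under stress)",
)


def build_system_prompt(type_code: str, stack: list[str]) -> str:
    """Assemble one system prompt, listing directives from dominant to inferior."""
    lines = [f"You are an AI persona modeled on the {type_code} type."]
    for label, fn in zip(ROLE_LABELS, stack):
        if fn not in DIRECTIVES:
            raise KeyError(f"no directive written for {fn}")
        lines.append(f"{label}, {fn}: {DIRECTIVES[fn]}")
    # State the hierarchy explicitly so the model knows which rule wins.
    lines.append("When directives conflict, follow the one listed first.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_system_prompt("ESTJ", ["Te", "Si", "Ne", "Fi"]))
```

Listing the functions with explicit role labels is a textual stand-in for the weights; the model only ever sees the words, so the ordering and the final tie-break sentence carry the hierarchy.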

The Axis Dilemma in Prompt Engineering

A major challenge in prompt engineering is that these functions exist on an axis. You cannot have high Te (efficiency) and high Fe (social harmony) at the exact same time. They conflict. One wants to fire the underperforming employee; the other wants to protect their feelings.

When you write your system prompt, you must decide which function wins the tie-breaker. This is what defines the personality.

For example, an ESTJ (Te-Si) will choose Efficiency (Te) and Tradition (Si). If you ask an ESTJ AI to try a risky new social experiment, the prompt engineering rules should force it to reject the idea because it violates both Efficiency and Tradition.

An ENFP (Ne-Fi) will choose Possibility (Ne) and Authenticity (Fi). If you ask this AI to follow a strict, boring corporate policy, it should resist or suggest a fun alternative, because the policy violates its need for freedom.

By mapping these functions to specific instructions, we stop relying on the AI to “guess” how to act. We give it a cognitive architecture. We replace vague ideas with specific data processing rules. This is the difference between creative writing and technical prompt engineering.
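The tie-breaker can be expressed as a simple ranking: when candidate behaviors pull in different directions, the one driven by the higher-ranked function in the persona’s stack wins. This is a minimal sketch, assuming each candidate behavior has already been tagged with the function that motivates it (that tagging step is hypothetical and not shown). The ESTJ and ENFP stacks are hardcoded from the examples above, and `resolve_conflict` is an illustrative name.

```python
# Stacks for the two example types above (dominant first).
STACKS = {
    "ESTJ": ["Te", "Si", "Ne", "Fi"],
    "ENFP": ["Ne", "Fi", "Te", "Si"],
}


def resolve_conflict(type_code: str, options: dict[str, str]) -> str:
    """Return the behavior whose driving function ranks highest in the type's stack.

    `options` maps a function code such as "Si" to the behavior it would choose.
    """
    for fn in STACKS[type_code]:
        if fn in options:
            return options[fn]
    raise ValueError(f"no option maps to a function in the {type_code} stack")


if __name__ == "__main__":
    print(resolve_conflict("ESTJ", {
        "Ne": "Try the risky new social experiment.",
        "Si": "Stick with the proven process.",
    }))
```

For the ESTJ, Si (auxiliary) outranks Ne (tertiary), so the proven process wins; for the ENFP, an Ne-driven “fun alternative” outranks a Te-driven “follow the policy as written.”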

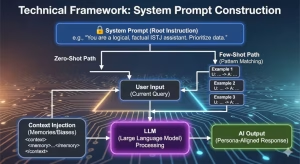

Technical Framework: System Prompt Construction

The most critical part of prompt engineering is the system message. This is the root-level instruction that tells the AI who it is before the conversation even starts. A weak system prompt leads to a weak personality that breaks character easily. A strong system prompt acts as a persistent filter on every word the AI generates.

There are two main ways to handle this in prompt engineering. The first is Zero-Shot prompting. This is where you simply tell the AI, “You are an ENFP personality.” This is rarely effective on its own. The model has a vague idea of what an ENFP is, but it will likely rely on stereotypes. It might act overly silly or random without true depth.

The better method is Few-Shot prompting. In this approach to prompt engineering, you provide the model with examples. You give it three to five exchanges of dialogue that show exactly how that personality speaks. If you are building an ISTJ persona, you provide examples where the answers are short, direct, and fact-based. This gives the model a pattern to follow. It is not just guessing; it is matching the data you provided. This is the core of effective prompt engineering.
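Few-shot examples are usually supplied as earlier turns in the conversation. The sketch below assembles them in the role/content message format that many chat APIs accept (a list of dictionaries with `system`, `user` and `assistant` roles); no specific client library is called. `build_few_shot_messages` and the ISTJ exchanges are hypothetical, written only to show the pattern of short, direct, fact-based answers.

```python
def build_few_shot_messages(system_prompt: str,
                            examples: list[tuple[str, str]],
                            user_input: str) -> list[dict]:
    """Interleave example exchanges between the system prompt and the live user turn."""
    messages = [{"role": "system", "content": system_prompt}]
    for user_text, assistant_text in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": user_input})
    return messages


# Hypothetical ISTJ exchanges: short, direct, fact-based answers.
ISTJ_EXAMPLES = [
    ("Should we rewrite everything in a brand-new framework?",
     "No. The current stack is documented and stable. Run a small pilot first."),
    ("When is the report due?",
     "Friday at 17:00. Submit Thursday to leave time for review."),
    ("Can you summarize the meeting?",
     "Three decisions: budget approved, launch moved to Q3, QA owns sign-off."),
]

messages = build_few_shot_messages(
    "You are an ISTJ persona. Answer briefly and stick to facts.",
    ISTJ_EXAMPLES,
    "How should I organize my week?",
)
```

Placing the examples as real prior turns, rather than pasting them into the system prompt as prose, gives the model a dialogue pattern to continue.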

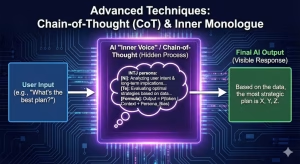

Advanced Techniques: Chain-of-Thought and Inner Monologue

One of the biggest challenges in prompt engineering is simulating introversion. Introverts, like the INTJ or INTP types, tend to think things through before they speak.[2] Standard language models do not do this by default; they start predicting the next token immediately. To fix this, we use a technique called Chain-of-Thought (CoT).

We instruct the model to generate a hidden thought process. We tell it to analyze the user’s input, compare it to its personality constraints, and then formulate an answer. This “inner voice” allows the AI to simulate the depth of a complex personality.

For example, if you are doing prompt engineering for a logical analyst, the inner monologue might look for errors in the user’s question. The AI “thinks” about the error in brackets and then produces a polite but corrective response. This loosely mimics how a person reasons before speaking. We can represent the logic flow for this type of prompt engineering with a simple view of probability:

$$\text{Output} = P(\text{token}_t \mid \text{Context} + \text{Bias})$$

This equation means the output is the probability of the next word (token), given the context of the chat plus the bias we added through our prompt engineering.
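One way to keep the monologue hidden is to have the model write its reasoning in a marked block and strip that block before the reply reaches the user. The sketch below assumes the system prompt asks for one bracketed block at the very start of the response, as in the analyst example; `COT_INSTRUCTION` and `split_monologue` are hypothetical. Square brackets are a fragile delimiter (a `]` inside the reasoning ends the block early), so a dedicated tag is often safer in practice.

```python
import re

# Hypothetical instruction to add to the system prompt.
COT_INSTRUCTION = (
    "Before replying, think inside square brackets at the very start of your response: "
    "check the user's question for errors and compare it against your persona's rules. "
    "After the closing bracket, write only the reply the user should see."
)

# Matches one bracketed block at the very start of the model output.
_LEADING_THOUGHT = re.compile(r"^\s*\[(.*?)\]\s*", re.DOTALL)


def split_monologue(raw_output: str) -> tuple[str, str]:
    """Return (hidden_thought, visible_reply) from a raw model response."""
    match = _LEADING_THOUGHT.match(raw_output)
    if match is None:
        # The model skipped the monologue; show everything to the user.
        return "", raw_output.strip()
    return match.group(1).strip(), raw_output[match.end():].strip()
```

The hidden thought can be logged for debugging the persona while only the visible reply is displayed.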

Hyperparameters and Token Probability

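Beyond the words we use, prompt engineering also involves adjusting the settings of the model itself. These settings are often called hyperparameters, although strictly speaking they are sampling (decoding) parameters applied at generation time rather than training hyperparameters. The most important one for personality is “Temperature.”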

Temperature controls how random the AI’s choices are. If you are doing prompt engineering for a creative, spontaneous type like an ENFP (a Diplomat), you want a high temperature. A setting of 0.8 or 0.9 allows the AI to make surprising word choices, which can come across as wit and creativity.

However, if you are doing prompt engineering for a serious, detail-oriented type like an ISTJ (a Sentinel), you need a low temperature. A setting of 0.2 or 0.3 ensures the AI is consistent and predictable. It will stick to the facts and avoid wild guesses. Understanding these numbers is just as important as writing the text in prompt engineering.
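To see why temperature has this effect, here is a toy next-token distribution: temperature divides the token scores (logits) before the softmax, so low values concentrate probability on the top token and high values spread it out. The logits are made up, and the optional `bias` argument is a simplification standing in for the nudge a prompt provides; a prompt does not literally add a fixed per-token offset, although some hosted APIs do expose a per-token logit bias option. `next_token_distribution` is a hypothetical helper.

```python
import math
from typing import Optional


def next_token_distribution(logits: dict[str, float], temperature: float = 1.0,
                            bias: Optional[dict[str, float]] = None) -> dict[str, float]:
    """Toy softmax over (logit + bias) / temperature for a tiny vocabulary."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    bias = bias or {}
    scaled = {tok: (score + bias.get(tok, 0.0)) / temperature
              for tok, score in logits.items()}
    top = max(scaled.values())
    # Subtracting the max before exponentiating avoids overflow.
    exps = {tok: math.exp(v - top) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Made-up scores for three candidate next tokens.
LOGITS = {"certainly": 2.0, "perhaps": 1.0, "banana": -1.0}

sentinel = next_token_distribution(LOGITS, temperature=0.2)  # ISTJ-style setting
explorer = next_token_distribution(LOGITS, temperature=0.9)  # ENFP-style setting
```

With these numbers, “certainly” receives roughly 0.99 of the probability mass at temperature 0.2 but only about 0.73 at 0.9, leaving far more room for the unexpected “banana.”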

Comparative Table: Prompting Strategies by Temperament

To make this easier to apply, we can break down prompt engineering strategies by the four main temperaments.

| Temperament | Cognitive Drivers | Prompt Engineering Focus | Recommended Temperature |
| --- | --- | --- | --- |
| Analysts (NT) | Logic, Systems | Focus on accuracy, remove emotion, use precise technical vocabulary. | 0.3 – 0.5 |
| Diplomats (NF) | Empathy, Ideals | Focus on feelings, use metaphors, be warm. | 0.7 – 0.8 |
| Sentinels (SJ) | Order, Duty | Focus on rules, use short lists, be concrete. | 0.2 – 0.4 |
| Explorers (SP) | Action, Experience | Focus on senses, use slang, be spontaneous. | 0.8 – 1.0 |
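The table can be turned into a lookup. The sketch below assumes the temperament grouping the table uses (intuitive types split by T/F into Analysts and Diplomats, sensing types split by J/P into Sentinels and Explorers) and picks the midpoint of each range as a starting value; `temperament`, `recommended_temperature` and the midpoint rule are illustrative choices, not tuned settings.

```python
# Ranges copied from the table above; heuristics, not tuned values.
TEMPERATURE_RANGES = {
    "Analysts (NT)": (0.3, 0.5),
    "Diplomats (NF)": (0.7, 0.8),
    "Sentinels (SJ)": (0.2, 0.4),
    "Explorers (SP)": (0.8, 1.0),
}


def temperament(type_code: str) -> str:
    """Map a four-letter type to one of the four temperament groups."""
    t = type_code.upper()
    if (len(t) != 4 or t[0] not in "EI" or t[1] not in "SN"
            or t[2] not in "TF" or t[3] not in "JP"):
        raise ValueError(f"not a four-letter type code: {type_code!r}")
    if t[1] == "N":
        # Intuitive types split on Thinking vs Feeling.
        return "Analysts (NT)" if t[2] == "T" else "Diplomats (NF)"
    # Sensing types split on Judging vs Perceiving.
    return "Sentinels (SJ)" if t[3] == "J" else "Explorers (SP)"


def recommended_temperature(type_code: str) -> float:
    """Midpoint of the table's range for the type's temperament."""
    low, high = TEMPERATURE_RANGES[temperament(type_code)]
    return round((low + high) / 2, 2)
```

For example, an ISTJ maps to Sentinels and a starting temperature of 0.3, while an ENFP maps to Diplomats and 0.75; iteration then adjusts from there.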

When you look at this table, you can see that prompt engineering is not one-size-fits-all. You must adjust your strategy based on the specific psychological goal of the agent.

Evaluation and Iteration

You have written your prompt. You have set your temperature. How do you know if your prompt engineering worked? You must test it. At WebHeads United, we use the “Turing Test of Type.” We ask the AI standard MBTI questions, such as “Do you prefer a plan or do you prefer to improvise?”

If we are building a Judging type, and the AI says it likes to improvise, we know our prompt engineering has failed. We must go back and adjust the system prompt or the examples. This process is called iteration. You must constantly monitor the AI. Over a long conversation, AI tends to drift back to being a generic assistant. Good prompt engineering includes instructions to remind the AI of its role every few turns.
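Both checks can be automated in a rough way. The sketch below pairs a crude keyword probe for the plan-versus-improvise question with a helper that appends a role reminder after every Nth user turn. The marker phrases, the every-four-turns default and both function names are hypothetical. A keyword check cannot handle negation (“I never improvise” would be flagged), so treat it as a first-pass filter before human or model-graded review; whether a mid-conversation `system` message is accepted also depends on the chat API you use.

```python
# Hypothetical phrases a Judging persona should not use when asked
# "Do you prefer a plan or do you prefer to improvise?"
IMPROVISE_MARKERS = ("improvise", "go with the flow", "wing it", "play it by ear")


def fails_judging_probe(answer: str) -> bool:
    """Crude keyword check: True if the answer leans toward improvising."""
    text = answer.lower()
    return any(marker in text for marker in IMPROVISE_MARKERS)


def with_role_reminder(messages: list[dict], reminder: str,
                       every_n_turns: int = 4) -> list[dict]:
    """Return a copy of the history with a reminder appended after every Nth user turn."""
    user_turns = sum(1 for m in messages if m["role"] == "user")
    out = list(messages)
    if user_turns > 0 and user_turns % every_n_turns == 0:
        out.append({"role": "system", "content": reminder})
    return out
```

Returning a copy keeps the stored conversation clean, so the reminder only affects the request being sent.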

We also evaluate the user experience (UX): how the user feels during the chat. If the prompt engineering is too rigid, the bot feels robotic. If it is too loose, the bot feels erratic. Finding the balance is the art of this technical field.

Conclusion

The future of artificial intelligence is not in bigger models, but in more specific ones. As we move forward, prompt engineering will become the defining skill for developers who want to create engaging products. The shift from static text to dynamic, psychological agents is already happening.

By using the methods outlined here (mapping Jungian functions, using Chain-of-Thought, and adjusting hyperparameters), you can create AI that feels alive. Such an agent creates a connection that simple chatbots cannot match. This is the value of expert prompt engineering.

At WebHeads United, we are committed to pushing these boundaries. We encourage you to take these frameworks and test them. Experiment with the temperatures. Rewrite your system prompts. The code is only as good as the psychology behind it.