The Intersection of Innovation and Risk

The world of business has changed faster in the last two years than in the previous twenty. You see it every day. Your competitors are using chatbots to answer phones, writing marketing emails with software that writes like a human, and predicting sales with tools that seem to know the future. This wave of artificial intelligence is exciting. It promises to make your work easier and your profits higher. But there is a hidden cost that most small business owners ignore until it is too late. That cost is the safety of your customers’ private information.

We are standing at a crossroads. On one side, you have the incredible power of AI to grow your business. On the other side, you have a growing mountain of laws and rules designed to protect people from having their personal lives exposed. For a long time, small business owners thought these rules only applied to giant corporations like Google or Facebook. That is no longer true. In 2025, ignorance is not a defense. If you use AI tools without a plan for AI data privacy compliance, you are risking heavy fines, lawsuits, and the trust of the local community that supports you.

This article is your roadmap. It is not just a list of warnings. It is a strategic guide to help you use these powerful new tools safely. We will walk through the new laws you need to know, the hidden risks in the software you use every day, and the simple steps you can take to protect your business. By the end of this guide, you will understand how to navigate AI data privacy compliance so you can innovate with confidence and keep your customers’ secrets safe.

The Regulatory Landscape: Why You Can’t Ignore This

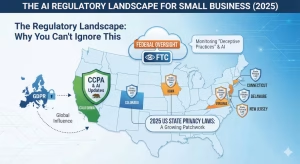

The first thing you need to understand is that the rules of the game have changed. A few years ago, data privacy laws were mostly a European concern. You might have heard of the GDPR, a strict law in Europe that forced websites to ask for permission before tracking you. Many American small business owners ignored it. Today, that attitude is dangerous. The United States has woken up to the need for privacy, and states are passing their own laws at a record pace.

At the end of 2025, we do not have one single federal law that covers everything. Instead, we have a patchwork of state laws that is tricky to navigate. California has led the way with the CCPA and its new updates regarding AI. But it is not just California anymore. Colorado, Virginia, Connecticut, and now states like Delaware, Iowa, and New Jersey have strict rules about how you handle data. If you sell products online to people in these states, you often have to follow their rules, even if your business is physically located in Ohio or Florida.

This is where AI data privacy compliance becomes critical. These laws often say you must tell people if an AI is making decisions about them. For example, if you use software to automatically filter job applications or decide who gets a loan, you might be breaking the law if you do not disclose it. The Federal Trade Commission, or FTC, is also watching closely. They have made it clear that using AI to deceive people or mishandling private data is a serious offense. You cannot hide behind the excuse that you are a “small business.” If you collect data, you are responsible for it.

Understanding the Core Risks of AI in Small Business

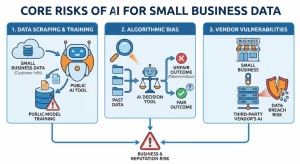

To fix a problem, you first have to see it. The risks of using AI are different from the old risks of just having a computer virus. The biggest danger comes from how AI tools are built. Many of the popular, free AI tools you find online are “trained” on the data users feed them. This means if you type your customers’ names, addresses, and credit card history into a public chatbot to get a summary, that private information might be absorbed by the AI. It could potentially be shown to other users later. This is a nightmare for AI data privacy compliance.

Another risk is something called “algorithmic bias.” This sounds technical, but it is simple. AI learns from the past. If the data from the past was unfair, the AI will be unfair too. Imagine you use an AI tool to help you hire new employees. If that tool was trained on data that mostly favored one type of person, it might automatically reject good candidates based on their age, gender, or race. This can lead to discrimination lawsuits. You might not even know the AI is doing it, but as the business owner, you are the one who will be blamed.

Finally, there is the risk of your vendors. You might be careful, but what about the software companies you hire? If you use a marketing agency that uses cheap, insecure AI tools to send your emails, and they leak your customer list, your customers will blame you, not the agency. AI data privacy compliance means you have to check the work of everyone you hire. You are the captain of the ship, and you are responsible for any leaks in the hull, no matter who caused them.

The “Privacy by Design” Framework for SMEs

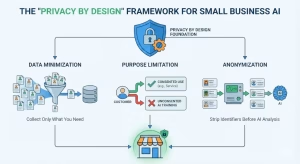

So, how do you protect yourself? You need to change how you think about data. In the past, businesses tried to collect as much information as possible, thinking it might be useful someday. In the age of AI, this is dangerous hoarding. The best strategy is a concept called “Privacy by Design.” This means you build safety into your business processes from the very beginning, not just as an afterthought.

The first pillar of this strategy is “Data Minimization.” This is a fancy way of saying: do not collect what you do not need. If you do not need a customer’s birthday to sell them a coffee, do not ask for it. If you do not have the data, an AI tool cannot leak it. It reduces your risk immediately. AI data privacy compliance is much easier when you have less data to worry about.

The second pillar is “Purpose Limitation.” This means you only use data for the specific reason the customer gave it to you. If a customer gave you their email address for a receipt, you cannot just upload it into an experimental AI marketing bot without asking them first. You have to respect the boundaries of consent.

The third pillar is “Anonymization.” Before you feed any data into an AI tool for analysis, you should strip away the parts that identify a specific person. Remove names, phone numbers, and exact addresses. If you make the data anonymous, you can still get useful insights from the AI without risking anyone’s privacy. This is a key tactic for achieving AI data privacy compliance without giving up the benefits of modern technology.
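
If you or someone on your team is comfortable with a little scripting, the idea can be sketched in a few lines of Python. This is a rough illustration, not a complete anonymizer. The field names (`name`, `phone`, `address`, `email`) and the `anonymize_record` helper are made up for this example, and the simple patterns below will miss many real-world formats.

```python
import re

# Hypothetical field names that directly identify a person.
DIRECT_IDENTIFIERS = {"name", "phone", "address", "email"}

# Illustrative patterns for emails and US-style phone numbers in free text.
# They will not catch every format.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_PATTERN = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def anonymize_record(record: dict) -> dict:
    """Return a copy of the record with identifying details removed."""
    cleaned = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIERS:
            continue  # drop identifying fields entirely
        if isinstance(value, str):
            # Mask identifiers that slipped into free-text fields.
            value = EMAIL_PATTERN.sub("[EMAIL]", value)
            value = PHONE_PATTERN.sub("[PHONE]", value)
        cleaned[field] = value
    return cleaned
```

Keep in mind that leftover details such as a city plus an unusual purchase can still point to one person, so a script like this lowers the risk rather than removing it.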

Step-by-Step Compliance Checklist

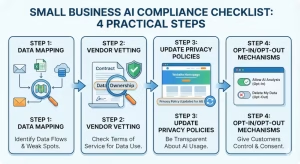

Now let’s get practical. You need a list of actions you can take this week. Following a checklist is the best way to ensure you do not miss anything important.

Step 1: Data Mapping. You cannot protect what you cannot find. Sit down and make a list of every piece of software you use. Where does customer data come in? Where does it go? Does it move from your email to a spreadsheet and then to an AI tool? This “map” will show you where your weak spots are. AI data privacy compliance starts with knowing your own flows.
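
A notepad or spreadsheet works fine for this map. If you prefer to keep it in a script, here is one possible sketch in Python. The `DataFlow` structure, the `find_weak_spots` helper, and the tools in the sample inventory are all placeholders invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class DataFlow:
    tool: str               # software that holds or receives the data
    data_types: list        # kinds of customer data involved
    sends_to_ai: bool       # does this tool pass data to an AI feature?
    training_opt_out: bool  # have you confirmed the data is not used for training?


def find_weak_spots(flows):
    """Return tools that send customer data to AI without a confirmed opt-out."""
    return [f.tool for f in flows if f.sends_to_ai and not f.training_opt_out]


# Example inventory; the tools and values are placeholders.
inventory = [
    DataFlow("Email inbox", ["names", "emails"], sends_to_ai=False, training_opt_out=False),
    DataFlow("Spreadsheet", ["names", "purchase history"], sends_to_ai=False, training_opt_out=False),
    DataFlow("AI summarizer", ["names", "purchase history"], sends_to_ai=True, training_opt_out=False),
]

print(find_weak_spots(inventory))  # ['AI summarizer']
```

The point is not the code itself but the habit: every tool gets a row, and every row answers the same questions.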

Step 2: Vendor Vetting. Look at the “Terms of Service” for your software. I know it is boring, but you need to check one specific thing: Data Ownership. Does the software company claim the right to use your data to train their models? If they do, you need to be very careful. You might need to switch to a paid “enterprise” version of the tool, which usually promises to keep your data private.

Step 3: Update Privacy Policies. Your website probably has a privacy policy link at the bottom. When was the last time you read it? It probably says nothing about AI. You need to update it to tell your customers that you use AI tools to help run your business, but that you do so responsibly. Transparency is a huge part of AI data privacy compliance.

Step 4: Opt-In/Opt-Out Mechanisms. Give your customers a choice. If you are going to use their data for something new, like an AI analysis, ask them first. Create a simple form or a checkbox on your website. Also, give them a way to say “no” or “delete my data” if they change their minds. This is not just polite; in many states, it is the law.
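
Behind that checkbox, your website or developer needs some way to remember each customer's answer. A minimal sketch in Python might look like the following. The `consent_log` dictionary and both helper functions are hypothetical, and a real system would store this in a database rather than in memory.

```python
from datetime import datetime, timezone

# Hypothetical in-memory log: customer id -> {purpose: (granted, timestamp)}
consent_log = {}


def record_consent(customer_id, purpose, granted):
    """Store the customer's latest choice for one specific purpose."""
    choices = consent_log.setdefault(customer_id, {})
    choices[purpose] = (granted, datetime.now(timezone.utc))


def has_consent(customer_id, purpose):
    """Treat 'never asked' the same as 'no'."""
    granted, _ = consent_log.get(customer_id, {}).get(purpose, (False, None))
    return granted
```

Two design choices matter here. Consent is recorded per purpose, so a "yes" to receipts is not a "yes" to AI analysis, and the default answer is always "no."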

Building Trust: The Local SEO Advantage

You might think all this compliance work is just a headache. But I want you to see it as an opportunity. In a world where people are scared of having their data stolen, being the “safe” business is a massive advantage. Trust is the most valuable currency for a small business. If your customers know you take AI data privacy compliance seriously, they will choose you over a competitor who plays fast and loose with their info.

This also helps you with local search engine optimization, or SEO. Search engines like Google are trying to send users to trustworthy sites. If your site has a clear, updated privacy policy and badges showing you are secure, it sends a positive signal. It shows you are a legitimate, responsible business. You can even mention your commitment to privacy on your “About Us” page. Write about how you use technology to improve service but always put your customers’ privacy first.

Reviews are another factor. If you protect customer data, you avoid the negative reviews that come from spam or leaks. A single data breach can lead to a flood of one-star reviews that destroys your reputation. By focusing on AI data privacy compliance, you are protecting your brand’s good name. In a small community, word travels fast. Make sure the word about you is that you are safe, secure, and professional.

Common Questions about AI Data Privacy Compliance

When people search for information on this topic, they often ask specific questions. Here are the answers to the most common ones, keeping AI data privacy compliance in mind.

- “What is data privacy in AI?” Data privacy in AI is the practice of handling personal information ethically and legally when using artificial intelligence tools. It involves making sure that the data you feed into an AI is not stolen, misused, or exposed to the public. It is about balancing the power of the tool with the rights of the person.

- “Do small businesses need to comply with the EU AI Act?” This is a tricky one. Generally, if you are a small business in the US and you do not sell to anyone in Europe, you do not need to follow the EU AI Act directly. However, the EU Act is setting the standard for the world. Many US laws are copying it. So, while you might not face a fine from Europe, following their guidelines is a great way to ensure you are ready for American laws. It is a “best practice” for AI data privacy compliance.

- “How do I protect client data when using ChatGPT?” The safest way is to avoid putting any names, addresses, or sensitive details into the standard, free version of ChatGPT. If you need to use it for business, look into the “Team” or “Enterprise” plans. These paid versions often have a setting that guarantees your data will not be used to train the public model. Always check the settings menu to toggle off “Chat History & Training” if available.

- “What is the penalty for non-compliance with data privacy laws?” The penalties can be severe. Under the CCPA in California, the statute sets civil penalties of up to $2,500 for each violation and up to $7,500 for each intentional violation. If you have 1,000 customers and you mishandle their data, do the math. That is a business-ending amount of money. Even smaller fines can hurt, not to mention the legal fees and the cost of losing your customers’ trust. AI data privacy compliance is an insurance policy against these disasters.
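
To make “do the math” concrete, here is the worst-case arithmetic from the penalty answer above, written as a tiny Python calculation. It assumes one violation per affected customer at the $7,500 figure. How violations are actually counted is a legal question, so treat this as an illustration of scale only.

```python
MAX_FINE_PER_VIOLATION = 7_500  # dollar figure quoted in the answer above
affected_customers = 1_000

# Assumption: each affected customer counts as one violation.
exposure = MAX_FINE_PER_VIOLATION * affected_customers
print(f"${exposure:,}")  # $7,500,000
```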

Recommended Tools and Technologies

You do not have to do this alone. There are tools designed to help small businesses with AI data privacy compliance.

First, look for “Compliance Automation” tools. Platforms like OneTrust or Termly offer packages for small businesses. They can scan your website and automatically generate the cookie banners and privacy policies you need. They stay updated on the latest laws so you do not have to read legal texts every day.

Second, seek out “Secure AI Alternatives.” Instead of using open, public AI tools, look for software made for business. For example, if you need an AI writer, tools like Jasper or Copy.ai often have stricter enterprise security protocols than a generic chatbot. Microsoft’s Copilot for business also offers commercial data protection where your data does not leak outside your organization.

Finally, consider using a password manager and two-factor authentication for every AI tool you use. It sounds basic, but many data breaches happen simply because a password was weak. Basic security hygiene is the foundation of AI data privacy compliance.

The Mechanics of Compliance

Let’s look deeper into the specific mechanics of how you can achieve AI data privacy compliance in your daily operations. It is one thing to know the rules; it is another to apply them when you are busy running a business.

Email Marketing Platforms

Think about your marketing. You likely use email marketing platforms. Many of these now have “AI assistants” built in to help you write subject lines. Before you click that “Generate” button, stop. Ask yourself: What data does this AI see? Does it see your entire customer list? If the AI suggests a subject line based on your customers’ purchase history, is that data being shared with the AI provider?

You need to verify this. Go into the account settings of your email provider. Look for a section called “Data Sharing” or “AI Features.” Often, there is a checkbox that says “Allow our AI to learn from your data.” Uncheck it. This is a small, concrete action that instantly improves your AI data privacy compliance.

Customer Support

Now consider your customer support. If you use a chatbot on your website, you must be transparent. A customer has the right to know if they are talking to a human or a machine. California law is very strict about this. You should program your bot to introduce itself as an “AI Assistant” in the very first message. Do not try to trick people into thinking it is a person. Deception is the enemy of trust and the enemy of compliance. If the bot cannot answer a question, it should fail gracefully and offer a way to contact a human.
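
If your chatbot is custom-built, the two rules above (disclose up front, hand off gracefully) can be sketched like this in Python. Everything here is a placeholder: the greeting text, the canned answers, and the `bot_reply` function are invented for illustration, and most off-the-shelf chatbot products expose these as settings instead of code.

```python
AI_DISCLOSURE = "Hi, I'm an AI Assistant, not a person."
HUMAN_FALLBACK = (
    "I'm not able to answer that. "
    "Please use our contact page to reach a member of our team."
)

# Hypothetical canned answers keyed by keyword; replace with your own.
KNOWN_ANSWERS = {
    "hours": "We are open 9am to 5pm, Monday through Friday.",
    "returns": "You can return items within 30 days with a receipt.",
}


def bot_reply(message, is_first_message):
    """Answer if possible, hand off if not, and always disclose on first contact."""
    text = message.lower()
    reply = HUMAN_FALLBACK  # fail gracefully by default
    for keyword, answer in KNOWN_ANSWERS.items():
        if keyword in text:
            reply = answer
            break
    if is_first_message:
        reply = f"{AI_DISCLOSURE} {reply}"
    return reply
```

Notice that the fallback is the default. The bot has to earn the right to answer, and when it cannot, the customer is pointed to a human.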

Data Retention Policies

Another aspect of AI data privacy compliance is knowing when to delete data. Digital storage is cheap, so we tend to keep everything forever. This is a bad habit. The longer you keep data, the more likely it is to be stolen or misused. You should set up a “Data Retention Policy.” This is a rule that says, for example, “We will delete customer emails five years after they stop doing business with us.”
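
A retention rule like that is easy to automate. The Python sketch below uses the five-year example from the paragraph above. The function names and the shape of the customer data (an ID mapped to a last-activity date) are assumptions made for this example, and the cutoff is approximate because it ignores leap days.

```python
from datetime import date, timedelta

RETENTION_YEARS = 5  # matches the example policy above


def is_expired(last_activity: date, today: date, years: int = RETENTION_YEARS) -> bool:
    """True when the last activity is older than the retention window."""
    cutoff = today - timedelta(days=365 * years)  # approximate; ignores leap days
    return last_activity < cutoff


def purge(customers: dict, today: date) -> dict:
    """Return only the customers whose records are still inside the window."""
    return {cid: last for cid, last in customers.items() if not is_expired(last, today)}
```

Running something like `purge` on a schedule, for example once a month, turns the policy from a promise into a habit.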

When you use AI, this becomes even more important. If you used customer data to train a local AI model three years ago, and those customers have since asked to be deleted, is their data still stuck inside the “brain” of your AI? This is a complex technical problem called “Machine Unlearning.” While true unlearning is difficult, you can mitigate the risk by retraining your models regularly with only the freshest, most compliant data. This ensures that your AI reflects your current active customer base, not the people who have left.

The Role of the Data Protection Officer (DPO)

In big companies, there is a person whose entire job is to handle this stuff. They are called the Data Protection Officer, or DPO. As a small business, you probably cannot afford to hire a full-time DPO. However, you should designate someone on your team to wear this hat. It could be you, or it could be a trusted manager.

This person is responsible for staying up to date on AI data privacy compliance. They are the one who checks the news for new regulations. They are the one who answers emails from customers asking about their data. By assigning this role to a specific person, you ensure that it does not fall through the cracks. It becomes someone’s job to care.

Employee Training Scenarios

Staff training deserves a closer look. It is not enough to just send a memo. You need to run scenarios.

Imagine this: Your sales manager finds a cool new AI tool that records sales calls and writes summaries. They sign up for the free trial using their work email and start recording calls without telling the customers.

Is this a problem? Yes. In states that require every party’s consent, such as California, recording a call without telling the customer is illegal under wiretapping laws. Uploading that voice data to a third-party AI may also violate data sharing rules.

Your training needs to cover this. Tell your staff: “If you want to use a new AI tool, you must get approval first.” Create a simple form they have to fill out. Ask: “What data will you put in it? Who owns the data? Is it secure?” This “gatekeeping” process is vital for maintaining AI data privacy compliance.
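
The approval form can be paper, a shared document, or a small script. As one possible sketch in Python, the three questions above become fields on a request, plus one extra field (`customers_informed`) added here because of the call-recording scenario. The `ToolRequest` structure and `review` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ToolRequest:
    tool_name: str
    data_entered: str         # "What data will you put in it?"
    vendor_owns_data: bool    # "Who owns the data?"
    is_secure: bool           # "Is it secure?"
    customers_informed: bool  # extra check, e.g. consent to record calls


def review(request: ToolRequest) -> list:
    """Return reasons to hold the request; an empty list means no flags."""
    concerns = []
    if request.vendor_owns_data:
        concerns.append("Vendor claims rights to our data")
    if not request.is_secure:
        concerns.append("Security has not been verified")
    if not request.customers_informed:
        concerns.append("Customers have not been told or asked")
    return concerns
```

The sales manager's call recorder from the scenario would come back with three flags, which is exactly the conversation you want to have before the free trial starts.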

Managing Third-Party Integrations

Most small businesses use a “stack” of software. You have your accounting software (like QuickBooks), your CRM (like Salesforce or HubSpot), and your email (like Gmail). These tools often talk to each other. Now, AI is being plugged into all of them.

You need to audit the connections. If you connect an AI note-taking app to your Zoom meetings, that app now has access to everything you say. If you discuss confidential financial data or employee health issues, that AI app has it.

Check the “Integrations” or “Apps” section of your main software platforms. Remove any old connections you do not use anymore. For the ones you keep, investigate their security. A chain is only as strong as its weakest link. Your high-security CRM is useless if you connect it to a leaky, cheap AI plugin.

The Human Element

At the end of the day, AI data privacy compliance is about people. It is about respecting the digital dignity of your customers. We are moving into an era where privacy is a luxury product. People are tired of being tracked, watched, and analyzed.

If you can position your small business as a sanctuary of privacy, you will win. You can use this in your marketing. “We use AI to serve you better, not to spy on you.” “Your data stays here.” These are powerful slogans.

Do not look at compliance as a burden. Look at it as a way to show you care. In a world of cold, impersonal technology, being the business that respects boundaries is a brilliant way to stand out.

Strategic Call to Action

We have covered a lot of ground. We looked at the scary reality of new laws, the hidden dangers in your software, and the practical steps you can take to be safe. It might feel overwhelming, but remember that AI data privacy compliance is a journey, not a destination. You do not have to be perfect by tomorrow. You just need to be better than you were yesterday.

The businesses that survive in the future will be the ones that customers can trust. By taking these steps, you are telling your community that you care about them. You are saying that their secrets are safe with you. That is a powerful message.

Here is your next step. Do not just close this article and forget about it. I want you to commit to a “1-Hour AI Audit” this week. Sit down with a notepad. List every AI tool you use. Check their privacy settings. Read your own privacy policy. Just one hour of focus can save you from a nightmare down the road. If you want to stay ahead of the curve, keep reading, keep learning, and keep protecting your business.

Future-Proofing Your Business (2026 and Beyond)

The world is not going to slow down. By 2026 and beyond, AI will be even more integrated into our lives. We are already seeing the rise of what some vendors call “Sovereign AI.” In this context, that means running a small AI model on your own computer or server, rather than sending your data to a big cloud company. This is the ultimate form of privacy because the data never leaves your building. As computers get faster, this will become an affordable option for small businesses.

Continuous education is also vital. You need to train your staff. Your employees are your first line of defense. If they do not understand AI data privacy compliance, they might accidentally paste sensitive data into a web form without thinking. Hold a training session once a quarter to remind them of the risks. Teach them to spot AI-generated phishing emails, which are becoming incredibly realistic.

Lastly, look at your insurance. Traditional business liability insurance might not cover a digital data breach or a lawsuit over AI bias. You need to ask your insurance agent about “Cyber Liability Insurance.” Make sure the policy specifically covers incidents related to AI and data privacy. It is a small cost for peace of mind.

Final Thoughts

You are now armed with the knowledge you need. You understand the landscape of 2025. You know about the EU influence, the state laws, and the FTC’s watchful eye. You know the technical risks of training-data leakage and bias. You have a checklist for mapping your data and vetting your vendors.

The path to AI data privacy compliance is clear. It requires vigilance, but it is not impossible. It is simply the new cost of doing business in a digital world. And for the savvy business owner, it is an investment that will pay off in trust, safety, and long-term success.

Take that first step today. Review your tools. Update your policy. Talk to your team. Secure your future.